
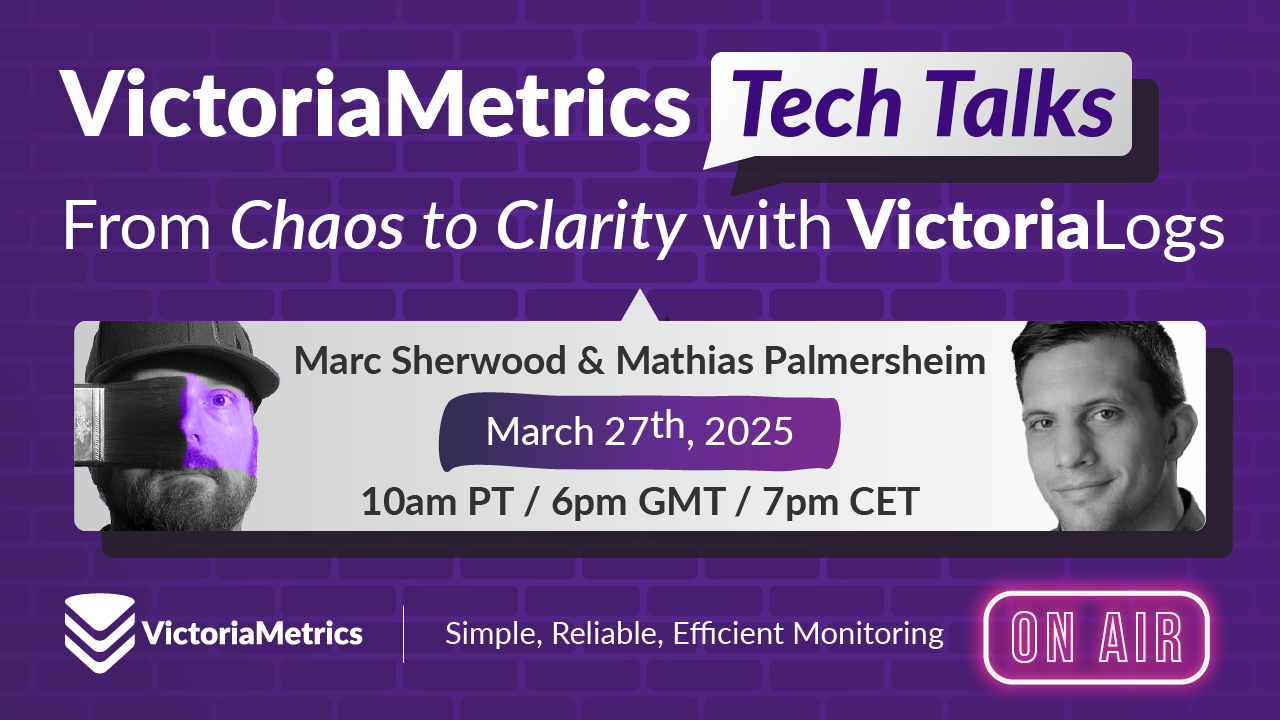


From Chaos to Clarity with VictoriaLogs

Join the live stream


When: Thursday, March 27, 2025, at 10:00am PDT / 6pm GMT / 7pm CET

Link: https://www.youtube.com/watch?v=KbQcAoSZE

From Chaos to Control: The VictoriaLogs Approach


Log data is a treasure trove of information, but only if you can effectively manage and analyze it. Traditional logging solutions often struggle with:

- Volume: The sheer volume of logs generated by modern applications can be overwhelming and expensive to store.

- Velocity: Logs are generated constantly, requiring real-time ingestion and processing.

- Variety: Logs come in various formats and from diverse sources, making standardization a challenge.

- Veracity: Ensuring the accuracy and reliability of log data is crucial for making informed decisions.

VictoriaLogs is designed to address these challenges head-on, offering an open-source, powerful, scalable, and cost-effective solution for log management.

What You’ll Learn


This session will cover the following key areas:

- Introduction to VictoriaLogs

  - Brief overview of VictoriaLogs and its core features.

  - Key benefits of using VictoriaLogs, including its performance, scalability, and cost-effectiveness compared to other solutions.

  - How VictoriaLogs fits into a modern observability stack.

- Efficient Ingestion and Optimization

  - Step-by-step guidance on ingesting logs from various sources into VictoriaLogs. This might include:

    - Using popular agents like Vector, Telegraf, and the OpenTelemetry Collector.

    - Direct ingestion via API.

  - Techniques for optimizing your log pipelines, such as:

    - Filtering irrelevant logs at the source to reduce volume.

    - Parsing and structuring logs for efficient querying.

    - Using recording rules to summarize logs as metrics.

    - Enriching logs with metadata, such as application owners.

- Best Practices for Streamlining Your Logging Processes

  - Establishing clear logging standards across your organization.

  - Implementing a centralized logging strategy.

  - Choosing the right log levels (DEBUG, INFO, WARN, ERROR, FATAL) for different situations.

  - Using structured logging formats (like JSON) for easier parsing and analysis.

  - Incorporating contextual information (e.g., user IDs, transaction IDs) into your logs.

- Enhancing Performance and Scalability

  - Understanding VictoriaLogs’ architecture and how it scales.

  - Tuning VictoriaLogs for optimal performance based on your specific needs.

  - Monitoring the health and performance of your VictoriaLogs deployment.

- Real-World Examples

  - Examples could include:

    - Troubleshooting application errors using log data.

    - Identifying performance bottlenecks.

    - Detecting security threats.

    - Monitoring user behavior.

    - Improving resource utilization.

- Q&A with the VictoriaLogs Team
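To give a feel for the "direct ingestion via API" and "filtering at the source" topics on the agenda, here is a minimal sketch in Python. It assumes a single-node VictoriaLogs instance listening on `localhost:9428` and uses its JSON lines ingestion endpoint (`/insert/jsonline`) with the `_stream_fields`, `_msg_field`, and `_time_field` query parameters. The field names (`app`, `message`, `timestamp`, `level`), the sample records, and the helper functions `drop_debug` and `build_request` are hypothetical and only for illustration; this is not necessarily how the session will demonstrate it.

```python
import json
import urllib.parse
import urllib.request

# Assumed address of a local single-node instance; adjust for your deployment.
VLOGS_URL = "http://localhost:9428/insert/jsonline"


def drop_debug(records):
    """Filter at the source: keep every record except DEBUG-level ones."""
    return [r for r in records if r.get("level") != "DEBUG"]


def build_request(records, base_url=VLOGS_URL):
    """Build (but do not send) a POST request with one JSON object per line."""
    query = urllib.parse.urlencode({
        "_stream_fields": "app",      # field(s) that identify the log stream
        "_msg_field": "message",      # field holding the log message
        "_time_field": "timestamp",   # field holding the event time
    })
    body = "\n".join(json.dumps(r) for r in records).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}?{query}",
        data=body,
        headers={"Content-Type": "application/stream+json"},
        method="POST",
    )


# Hypothetical sample records.
sample = [
    {"timestamp": "2025-03-27T17:00:00Z", "app": "checkout", "level": "DEBUG", "message": "cart dump"},
    {"timestamp": "2025-03-27T17:00:01Z", "app": "checkout", "level": "INFO", "message": "payment accepted"},
    {"timestamp": "2025-03-27T17:00:02Z", "app": "checkout", "level": "ERROR", "message": "card declined"},
]

request = build_request(drop_debug(sample))
print(request.full_url)
print(request.data.decode("utf-8"))

# To actually send the logs, uncomment the next line (requires a running instance):
# urllib.request.urlopen(request)
```

Building the request separately from sending it makes the payload easy to inspect before any data reaches the server. In practice, an agent such as Vector or the OpenTelemetry Collector would usually handle batching and retries for you.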

Key Takeaways


- VictoriaLogs provides a powerful and efficient solution for managing large volumes of log data.

- Optimizing your log pipelines is crucial for gaining valuable insights and reducing costs.

- Best practices, such as structured logging and filtering, can significantly improve your logging efficiency.

- VictoriaLogs is designed for scalability and performance, allowing you to handle growing log volumes with ease.
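As a rough illustration of the structured logging practice mentioned above, the sketch below uses Python's standard `logging` module to emit one JSON object per log line, with contextual fields attached. The `JsonFormatter` class, the `checkout` logger name, and the `user_id` / `transaction_id` keys are hypothetical choices for this example, not part of VictoriaLogs or any specific library.

```python
import io
import json
import logging
from datetime import datetime, timezone


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON object."""

    # Hypothetical context keys; pick whatever your organization standardizes on.
    CONTEXT_KEYS = ("user_id", "transaction_id")

    def format(self, record):
        entry = {
            # Event time in UTC, ISO 8601 format.
            "timestamp": datetime.fromtimestamp(record.created, tz=timezone.utc).isoformat(),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Copy contextual fields passed via `extra=...`, if present.
        for key in self.CONTEXT_KEYS:
            if hasattr(record, key):
                entry[key] = getattr(record, key)
        return json.dumps(entry)


# Write to an in-memory stream here; a real service would log to stdout or a file.
stream = io.StringIO()
handler = logging.StreamHandler(stream)
handler.setFormatter(JsonFormatter())

logger = logging.getLogger("checkout")
logger.setLevel(logging.INFO)   # DEBUG records are dropped at the source
logger.addHandler(handler)
logger.propagate = False

logger.debug("cart contents dumped")
logger.info("payment accepted", extra={"user_id": "u-123", "transaction_id": "t-456"})

print(stream.getvalue(), end="")
```

Because every line is a self-describing JSON object, a log pipeline can parse it without custom regular expressions, and fields such as `user_id` become directly queryable.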

Resources for Further Learning


- Click here for the live stream, or to set a 🔔 reminder on YouTube

From Chaos to Clarity with VictoriaLogs - Get hands-on with VictoriaLogs today and experience the benefits firsthand!

https://victoriametrics.com/products/victorialogs/

Leave a comment below or contact us if you have any questions!
