This blog post is also available as a recorded talk with slides.

Table of Contents

Performance optimization techniques in time series databases:

- String interning;

- Function caching (you are here);

- Limiting concurrency for CPU-bound load;

- sync.Pool for CPU-bound operations.

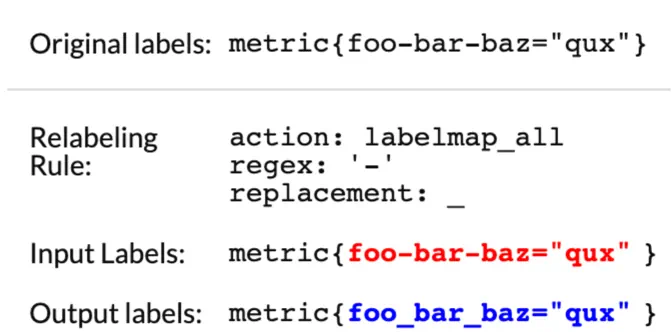

Relabeling is an important feature that allows users to modify metadata (labels) of scraped metrics before they ever make it to the database.

As an example, some of your scrape targets may generate metric labels with underscores (_), and others may generate labels with hyphens (-). Relabeling allows you to make this consistent, making database queries easier to write:

An example of a relabeling rule that replaces hyphens with underscores. You can play with VictoriaMetrics' relabeling functionality in our playground.

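To make the rule concrete, here is a minimal Go sketch of what such a rule does to a set of labels. It is not vmagent's relabeling implementation: the helper name `normalizeLabelNames` and the plain `map[string]string` label representation are hypothetical, chosen only for illustration.

```go
package main

import (
	"fmt"
	"strings"
)

// normalizeLabelNames is a hypothetical helper: it returns a copy of labels
// where every hyphen in a label name is replaced with an underscore.
// Label values are left untouched.
func normalizeLabelNames(labels map[string]string) map[string]string {
	out := make(map[string]string, len(labels))
	for name, value := range labels {
		out[strings.ReplaceAll(name, "-", "_")] = value
	}
	return out
}

func main() {
	// Two targets exposing the "same" label with different separators.
	fromTargetA := map[string]string{"foo_bar_baz": "1"}
	fromTargetB := map[string]string{"foo-bar-baz": "1"}

	fmt.Println(normalizeLabelNames(fromTargetA)) // map[foo_bar_baz:1]
	fmt.Println(normalizeLabelNames(fromTargetB)) // map[foo_bar_baz:1]
}
```

After normalization, both targets produce the same label name, so a single query matches series from either one.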

Relabeling, if defined, happens every time vmagent scrapes metrics from your targets. But, as we’ve seen before, vmagent is likely to see the same metric labels many times. That means if we once saw foo-bar-baz and changed it to foo_bar_baz, it is very likely we’ll have to do the same transformation on the next scrape as well.

In this case, caching the results of the relabeling function is likely to reduce CPU usage.
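The effect can be illustrated with a minimal, single-goroutine sketch: a plain map memoizes the transformation, and a counter records how often the expensive function actually runs across three simulated scrapes that return the same label names. The names `memoize`, `relabel`, and `calls` are hypothetical. A plain map is not safe for concurrent use, which is one reason a concurrent scraper needs something like sync.Map instead.

```go
package main

import (
	"fmt"
	"strings"
)

// memoize wraps f with an unbounded map-based cache.
// NOTE: the returned function is NOT safe for concurrent use.
func memoize(f func(string) string) func(string) string {
	cache := make(map[string]string)
	return func(s string) string {
		if v, ok := cache[s]; ok {
			return v // cache hit: f is not called
		}
		v := f(s)
		cache[s] = v
		return v
	}
}

func main() {
	calls := 0
	relabel := memoize(func(s string) string {
		calls++ // count how often the expensive transformation really runs
		return strings.ReplaceAll(s, "-", "_")
	})

	// Every simulated scrape returns the same label names.
	labels := []string{"foo-bar-baz", "job-name", "instance"}
	for scrape := 0; scrape < 3; scrape++ {
		for _, l := range labels {
			relabel(l)
		}
	}
	fmt.Println(calls) // 3: one call per distinct label, not 9
}
```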

Internally, we implement caching for relabeling functions via a struct called Transformer:

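```go
type Transformer struct {
	// m caches transformFunc results: input string -> transformed string.
	m sync.Map

	// transformFunc performs the actual (potentially expensive) transformation.
	transformFunc func(s string) string
}
```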

Transformer contains a sync.Map for thread-safe access to cached results, and a function, transformFunc, that does the actual relabeling.

Transformer implements the Transform method, which we use during relabeling:

```go
func (t *Transformer) Transform(s string) string {
	v, ok := t.m.Load(s)
	if ok {
		// Fast path - the transformed `s` is found in the cache.
		return v.(string)
	}
	// Slow path - transform `s` and store it in the cache.
	sTransformed := t.transformFunc(s)
	t.m.Store(s, sTransformed)
	return sTransformed
}
```

The Transform method first checks the cache using sync.Map's Load method. If a cached result is found, it returns that result. Otherwise, it calls transformFunc to do the transformation, stores the result in the cache, and returns it.

As an example, here’s a Transformer that replaces any character not allowed in the Prometheus data model with an underscore:

```go
// SanitizeName replaces characters that Prometheus does not support
// in metric names and label names with _.
func SanitizeName(name string) string {
	return promSanitizer.Transform(name)
}

var promSanitizer = NewTransformer(func(s string) string {
	return unsupportedPromChars.ReplaceAllString(s, "_")
})

var unsupportedPromChars = regexp.MustCompile(`[^a-zA-Z0-9_:]`)
```

In the example above, promSanitizer is created using our Transformer constructor, NewTransformer. The constructor creates a new sync.Map and stores a reference to the passed function. Now we can use the SanitizeName function on the code’s “hot path” to sanitize scraped label names.
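The article does not show NewTransformer itself, so the runnable program below fills it in with an assumed sketch based on the description above. In Go, the zero value of sync.Map is ready to use, so the constructor only needs to store the function reference. Everything else is copied from the snippets above.

```go
package main

import (
	"fmt"
	"regexp"
	"sync"
)

// Transformer caches the results of transformFunc.
type Transformer struct {
	m             sync.Map
	transformFunc func(s string) string
}

// NewTransformer is an ASSUMED sketch of the constructor: the zero-value
// sync.Map needs no initialization, so only transformFunc is stored.
func NewTransformer(transformFunc func(s string) string) *Transformer {
	return &Transformer{transformFunc: transformFunc}
}

// Transform returns the cached result for s, computing it on a cache miss.
func (t *Transformer) Transform(s string) string {
	if v, ok := t.m.Load(s); ok {
		return v.(string)
	}
	sTransformed := t.transformFunc(s)
	t.m.Store(s, sTransformed)
	return sTransformed
}

// SanitizeName replaces characters that Prometheus does not support
// in metric names and label names with _.
func SanitizeName(name string) string {
	return promSanitizer.Transform(name)
}

var promSanitizer = NewTransformer(func(s string) string {
	return unsupportedPromChars.ReplaceAllString(s, "_")
})

var unsupportedPromChars = regexp.MustCompile(`[^a-zA-Z0-9_:]`)

func main() {
	fmt.Println(SanitizeName("foo-bar.baz"))          // foo_bar_baz
	fmt.Println(SanitizeName("http_requests:rate5m")) // http_requests:rate5m
	fmt.Println(SanitizeName("foo-bar.baz"))          // foo_bar_baz (cache hit)
}
```

Only the first SanitizeName("foo-bar.baz") call runs the regular expression; the third call is served by the sync.Map lookup.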

Function result caching lets you trade increased memory usage for reduced CPU time in certain cases. It works best for CPU-heavy functions that receive a limited set of distinct input values. Examples of CPU-heavy functions include string transformations and regex matching.
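Why the number of distinct inputs matters can be shown by replaying two hypothetical workloads against an unbounded cache and measuring the hit rate. The helper `hitRate` and both workloads are illustrative assumptions, not measurements from vmagent: 10,000 lookups over 10 repeating label names versus 10,000 lookups where every key is new.

```go
package main

import (
	"fmt"
	"strconv"
)

// hitRate is a hypothetical helper: it replays keys against an unbounded
// cache and returns the fraction of lookups served from the cache.
func hitRate(keys []string) float64 {
	if len(keys) == 0 {
		return 0
	}
	seen := make(map[string]struct{})
	hits := 0
	for _, k := range keys {
		if _, ok := seen[k]; ok {
			hits++
			continue
		}
		seen[k] = struct{}{} // miss: the value would be computed and stored
	}
	return float64(hits) / float64(len(keys))
}

func main() {
	const lookups = 10000

	// Label names: a handful of distinct values repeated on every scrape.
	labelNames := make([]string, lookups)
	for i := range labelNames {
		labelNames[i] = "label_" + strconv.Itoa(i%10)
	}

	// Ad-hoc inputs: every lookup is a new, distinct string.
	queries := make([]string, lookups)
	for i := range queries {
		queries[i] = "query_" + strconv.Itoa(i)
	}

	fmt.Println(hitRate(labelNames)) // 0.999
	fmt.Println(hitRate(queries))    // 0
}
```

In the first workload, 10 cached entries serve 9,990 lookups. In the second, the cache grows to 10,000 entries and never serves a single lookup, so it costs memory without saving any CPU.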

Summary


VictoriaMetrics uses function result caching for its relabeling feature, but doesn’t use it to cache database queries. For database queries, the range of possible values is too large, so the cache hit rate would likely be low. As with string interning, function result caching works best when the number of cached variants is limited, so you can achieve a high cache hit rate.

Stay tuned for the next blog post in this series!